This post is part of the Skills Before Tools series based on my K-12 AI Implementation Guide. Each post unpacks one of the five core throughline skills that students need to use AI strategically and responsibly.

Purpose setting, questioning, and clarity in communication, which were covered in my previous blog posts, help students engage with AI more intentionally. Evaluation and judgment ensure that students remain in control of their thinking once the AI responds. Like the other foundational skills in this series, evaluation and judgment develop over time through explicit instruction, practice, and reflection. When schools prioritize these skills, students are positioned to use AI as a support for rather than a replacement of learning.

A Familiar Concern, Higher Stakes

I’ve heard people reference the early controversy over calculators when talking about AI in education. Many worried that calculators would be the end of mathematical thinking. They worried that offloading computation would negate the need for students to think. That concern was real and understandable at the time. However, the outcome for many students was totally different. The calculator took over basic computation. This freed students to focus on reasoning, problem solving, and wrestling with more complex mathematical problems.

AI presents a similar moment, but on a very different scale. Unlike calculators, which were largely confined to mathematics, AI is everywhere. Students are using it to generate ideas, explain concepts, summarize texts, complete writing tasks, answer questions across disciplines, and navigate their lives beyond school. AI’s impact isn’t limited to a single subject; it touches nearly every aspect of our lives.

That reach raises the stakes. To stay in charge of their thinking, students must be savvy evaluators of content. They need to use their judgment to examine information generally and AI’s output specifically. They need to cross-reference information (just like we ask them to do with any online research), ask better questions, and decide whether what has been generated is useful, accurate, unbiased, and aligned with their intent.

When students develop the skills to confidently evaluate and judge AI output, they’re doing far more than simply deciding whether or not to use a response. They’re practicing critical analysis, questioning assumptions, checking for accuracy and bias, and making intentional choices about their work.

In addition to higher-order thinking, this process also drives metacognitive skill building because students must monitor their own thinking as they use AI. They have to consider questions like:

- Do I trust this? Why or why not?

- Does this answer align with my stated purpose?

- What additional information is missing?

They must decide whether AI is supporting their thinking, misleading them, or quietly taking over their cognitive work.

Why Evaluation and Judgment Are Foundational Skills for AI Use

Evaluation and judgment have emerged as critical skills in current AI research and appear in many emerging frameworks that guide educators in teaching AI literacy. Increasingly, effective AI use is measured by how well a user can decide when to use AI, how to assess what it produces, and when to ignore or reject an output altogether. Effective use is less about generating responses and more about making sound decisions about those responses (Mills et al., 2024). Current AI-literacy frameworks reflect this priority. Rather than treating judgment as an advanced or optional skill, frameworks like the Digital Promise AI Literacy Framework position it as foundational to responsible use, highlighting the importance of assessing the credibility of AI outputs and identifying bias.

However, research on how young people assess AI-generated content reveals a significant gap between expectation and practice. While learners may attempt to judge credibility, they often lack clear criteria or strategies to explain and support their judgments (Choi et al., 2025; Lao et al., 2025). Without explicit instruction and practice, evaluation tends to be shallow, inconsistent, or influenced by how polished or confident the response sounds.

This gap between expectation and practice matters because evaluation and judgment are what separate using AI from overreliance on it. They determine whether AI supports decision-making or becomes the decision-maker. If we want students to be accountable for their thinking and work, we must help them cultivate these essential skills.

Evaluation and Judgment

Evaluation and judgment play a critical role at two points in the AI-supported learning process. The first comes before a tool is ever used: students must decide whether AI is appropriate for the task at hand. To make that decision, they consider the purpose and context of the task, the expectations for AI use, and whether AI will meaningfully support their learning goal. These front-end decisions require students to pause and think intentionally about why they might benefit from using AI rather than defaulting to it.

The second point comes after AI produces a response. Once students have an output, evaluation and judgment shift to assessing quality, accuracy, and usefulness. Students analyze whether a response is complete, correct, and aligned with their original purpose. They look for bias or missing perspectives, verify claims by cross-checking sources, question unsupported confidence, and decide what to revise, discard, or build upon.

At this stage in the process, AI provides content, but students remain responsible for determining what they use to inform their work.

Before Using AI: Deciding If and How to Use the Tool

Focus: Appropriateness and Intent

- What am I trying to accomplish in this task?

- Is AI an appropriate tool for this purpose? Why or why not?

- What part of the task do I want support with?

- What thinking do I still need to do myself?

- What guidelines or expectations apply to AI use for this assignment?

During AI Use: Monitoring and Adjusting

Focus: Real-time Judgment and Decision Making

- Does this response make sense based on what I already know?

- How well does this output align with my original goal or prompt?

- What seems unclear, incomplete, or questionable?

- What assumptions or perspectives might be missing?

- Do I need to refine my prompt or ask a follow-up question?

After AI Responds: Evaluating Quality and Usefulness

Focus: Verification and Accountability

- Is this information accurate and credible? How do I know?

- Where did I cross-check this information?

- Does this output reflect bias, oversimplification, or gaps?

- What will I use, revise, or discard? Why?

- How did AI support my work without taking over the thinking?

Together, these skills position students as active decision makers throughout the process rather than passive recipients of AI-generated information. When students make intentional choices before and after using AI, they retain ownership of both the process and the product, which is the throughline of responsible, student-centered AI use.

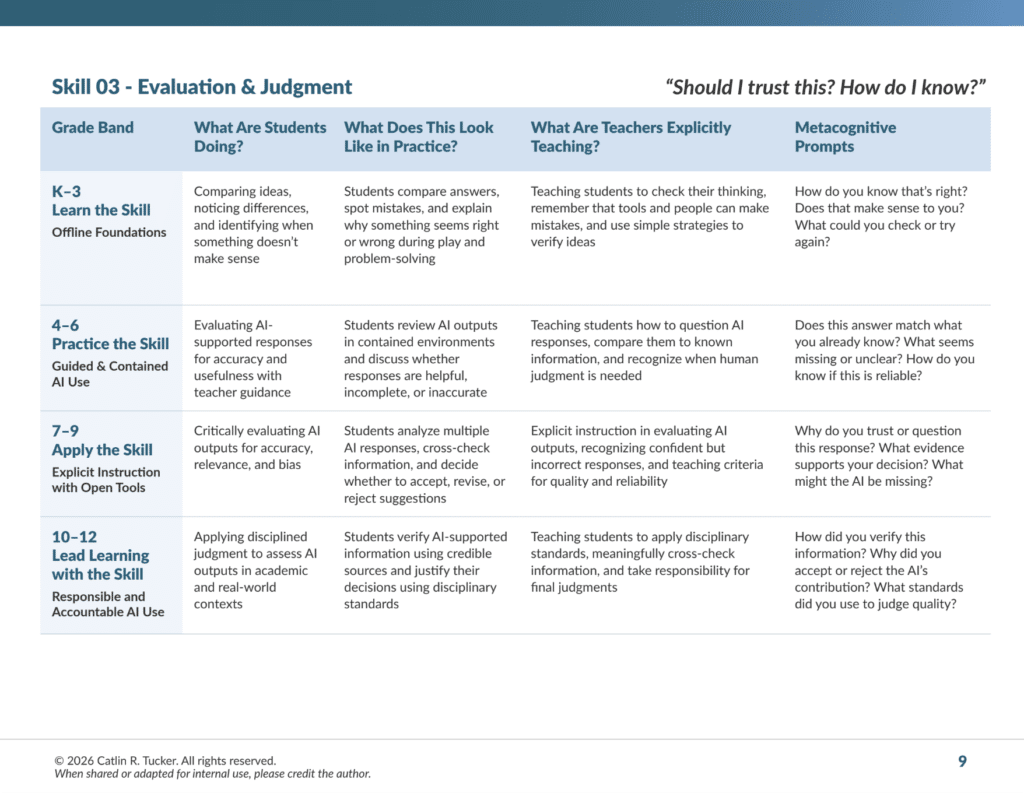

Evaluation and Judgment Across Grade Levels in K–12 Classrooms

Across grade levels, evaluation and judgment center on a set of consistent questions: Should I trust this? How do I know if I can trust it? As students progress through K-12, they move from simple checks for accuracy to more nuanced judgments about quality, bias, alignment, and usefulness. While the complexity of tasks, content, and expectations increases over time, the goal remains the same: students are responsible for their decisions before and after using AI.

Grades K-3: Building Evaluation Skills Through Meaning Making

In the early grades, students are beginning to build their capacity for evaluation and judgment offline. We must encourage them to notice differences, compare items and ideas, and recognize when something is not right or does not make sense. Teachers work to normalize the reality that ideas and information can be mistaken, whether they come from a person or a tool.

At this stage, evaluation is embedded in play, discussion, and problem-solving. Students might compare two strategies for solving a problem and explain which they prefer and why. They may work to spot an error in a pattern or explain why one answer is stronger than another. These activities reinforce that noticing mistakes and working to correct them is an essential part of the learning process.

Reflection in these early grades is conversational. Teachers can prompt students to articulate their thinking with questions like:

- How do you know that is right?

- Does that make sense to you?

- Why did you do it this way?

- How could you check that or try again?

This early work helps students develop their ability to evaluate by encouraging them to think critically. They learn to ask questions, trust their reasoning, and understand that information and answers need to be examined.

Grades 4-6: Practicing Evaluation in Guided AI Use

As students begin interacting with AI in structured, teacher-moderated environments, they apply the evaluation and judgment skills they developed in kindergarten through third grade to AI-generated information. Skills like questioning sources, comparing information, and identifying things that do not make sense now transfer to their work with AI.

Teachers model the process of examining AI outputs. They guide students in reviewing AI-generated responses to discuss what is reliable, model how to check credibility, and identify what feels unclear or incorrect. At this stage, evaluation often happens collectively, with teachers guiding students to compare AI responses to other trusted sources. Students also begin making strategic decisions about how and why to use AI with teacher support. They must ask whether AI is appropriate for their task and what they are trying to accomplish by using it.

Reflection prompts help students practice making simple judgments about reliability and usefulness:

- Does this answer your question or help with the task?

- Does this response match what you already know?

- What is missing or unclear?

- How do you know if you can trust this information?

These guided experiences help students develop the habit of questioning AI outputs rather than assuming they are correct.

Grades 7-9: Applying Judgment with Increasing Independence

In middle and early high school, students begin applying evaluation and judgment more independently. At this critical point in their skill development, they are likely using a range of AI tools from chatbots to content creation tools for more complex academic tasks (e.g., research, essay planning, and problem-solving). Students make more decisions about whether AI is appropriate for a given task. They must consider factors like assignment guidelines, learning goals, and the type of thinking required. They begin to recognize situations where AI might shortcut important steps or where their own knowledge and reasoning are more valuable.

This work demands a deeper level of scrutiny into the tools students use, why they use them, and whether the content those tools produce is reliable. Students analyze AI outputs for accuracy, relevance, and bias. In addition, they must compare multiple responses and cross-check information against other sources.

Teachers provide explicit instruction on common issues, such as confident-sounding but incorrect answers, oversimplifications, unsupported claims, and missing perspectives. Students practice identifying these issues in AI outputs and responding to them, deciding what to accept, revise, or reject. At this stage, teachers shift from modeling evaluation to facilitating students’ independent practice. They step in to support more complex judgments and explicitly teach and model skills like cross-referencing, distinguishing between claims and evidence, and identifying when information requires further verification.

Reflection becomes more analytical at this stage. Teachers prompt students to justify their decisions with questions like:

- Why do you trust or question the accuracy of this response?

- What evidence supports your decision to accept, revise, or reject this response?

- How did you check the reliability of the information generated by AI?

- What might be missing from this response? How do you know?

These questions signal that judgment is an active process and that students remain responsible for evaluating information before using it in their work.

Grades 10-12: Leading with Disciplined Judgment and Accountability

By high school, evaluation and judgment are applied within disciplinary and real-world contexts. Students are expected to verify AI-supported information using credible sources, apply disciplinary standards, and justify their decisions with evidence. AI becomes one input among many. As a result, students must determine when it adds value and when other approaches, such as peer collaboration, primary research, or talking with experts, are more appropriate.

Students take responsibility for explaining why they chose to use AI for a task, how they evaluated its contribution, and what they ultimately decided to use from their collaboration with it. They consider disciplinary norms and expectations, recognizing that standards for evidence, style, and rigor vary across contexts. Teachers emphasize accountability, helping students understand that the final judgment is theirs and that it must be something they can defend.

Reflection prompts at this level require students to articulate their evaluative process clearly:

- Why did you choose to use AI instead of another tool or resource in this situation?

- How did you verify this information? What sources did you reference to confirm its reliability?

- Why did you accept or reject the AI’s contribution? What criteria did you use to make that decision?

- What disciplinary standards did you use to judge the quality of the AI’s output?

At this stage, evaluation and judgment position students to use AI confidently and responsibly. The goal is to ensure that technology supports rigorous thinking rather than replacing it.

These Skills Matter Now More Than Ever

In an AI-rich learning environment, evaluation and judgment are essential. As AI systems generate fluent, confident, and often persuasive responses, students need the skills to decide when AI is appropriate to use, how much trust to place in its output, and when human judgment must take precedence.

When teachers explicitly teach evaluation and judgment—beginning with foundational questioning in early grades and building toward disciplined, independent analysis in high school—students remain accountable for their thinking. Students analyze AI-generated content, question accuracy and bias, cross-check information, and make intentional decisions about what to accept, revise, or reject.

In doing so, they become active decision-makers who use AI as a tool to support their learning while maintaining ownership of their work and their ideas.

The early concerns about calculators were never really about the tool itself. They were about whether students would stop thinking. What we learned over time is that tools only replace thinking when judgment is absent. The same is true with AI. When students are taught to evaluate, question, and make intentional decisions, the tool doesn’t diminish thinking; it has the potential to deepen it.

Ultimately, evaluation and judgment are what allow students to navigate an increasingly complex information landscape with discernment, integrity, and agency.

Up Next: Revision and Improvement

In the next post in this Skills Before Tools series, I’ll focus on revision and improvement, another set of essential skills students need to use AI effectively.

If you are looking for support as you navigate these conversations about AI implementation in your school or district, you can download the Skills Before Tools: A K-12 AI Implementation Guide. The guide is designed to help teams ground AI decisions in shared language, developmental progressions, and transferable skills. I am also available to support this work through professional learning, coaching, or discussions on implementation.

Download your copy of Skills Before Tools: A K-12 Guide to AI Implementation.

Choi, W., Bak, H., An, J., Zhang, Y., & Stvilia, B. (2025). College students’ credibility assessments of GenAI‑generated information for academic tasks: An interview study. Journal of the Association for Information Science and Technology, 76(6), 867–883. https://doi.org/10.1002/asi.24978

Lao, Y., Hirvonen, N., & Larsson, S. (2025). AI and authenticity: Young people’s practices of information credibility assessment of AI‑generated video content. Journal of Information Science. Advance online publication. https://doi.org/10.1177/01655515251330605

Mills, K., Ruiz, P., Lee, K., Coenraad, M., Fusco, J., Roschelle, J., & Weisgrau, J. (2024). AI literacy: A framework to understand, evaluate, and use emerging technology. Digital Promise. https://files.eric.ed.gov/fulltext/ED671235.pdf
